Artificial Intelligence is expected to create trillions of dollars of value across the economy. But as the technology becomes a part of our daily lives, many people are still skeptical. Their main concern is that many AI solutions work like black boxes and seem to magically generate insights without explanation.

Under the slogan “Build AI with Humans-in-the-Loop,” the SEMANTiCS Conference, taking place in Austin, Texas, from 21 to 23 April 2020, will address this issue by promoting innovative approaches to building AI applications. With the goal of making AI inherently explainable and more transparent, the conference aims to convince the US market to focus on building AI applications that people can trust.

Andreas Blumauer, Founder and CEO at Semantic Web Company, one of the conference’s main organizers, explains how the rise of Semantic AI promises to overcome the barriers between humans and machines by making them work together.

Why does Artificial Intelligence often work like a black box? And why does that fuel skepticism?

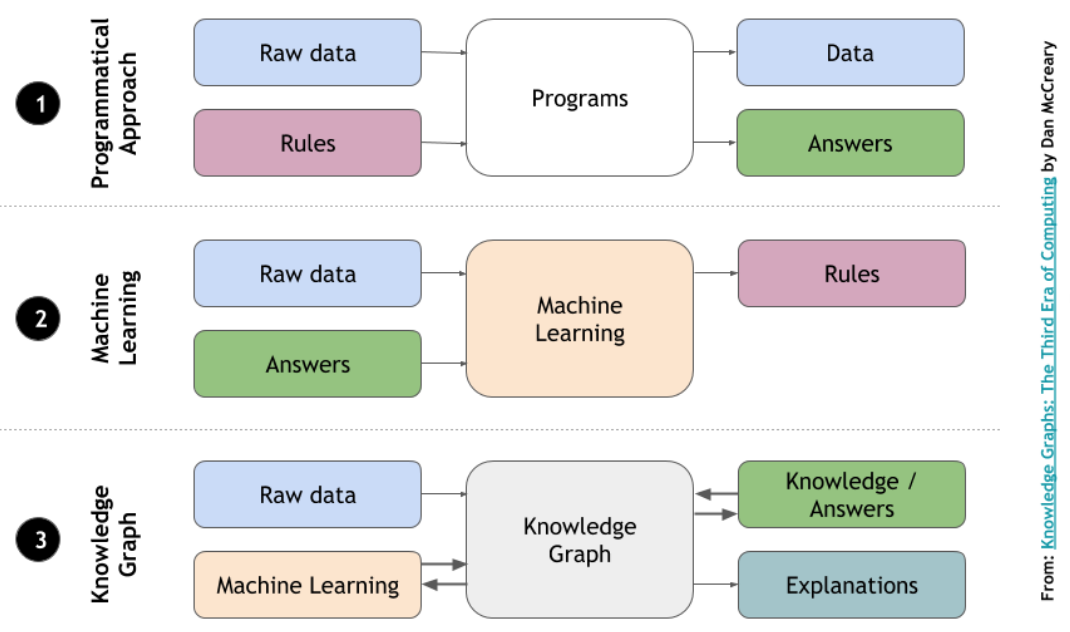

The promise of AI based on Machine Learning algorithms such as deep learning is to automatically extract patterns and rules from large data sets. This works very well for specific problems and in many cases helps to automate classification tasks. However, why exactly things are classified in one way or another cannot be explained, because Machine Learning cannot extract causalities and cannot reflect on why particular rules were extracted.

Machine Learning algorithms learn from historical data, but cannot derive new insights from it. In an increasingly dynamic environment, this is causing skepticism because the whole approach of deep learning is based on the assumption that there will always be enough data to learn from. In some industries, such as finance and healthcare, it is becoming increasingly important to implement AI systems that make their decisions explainable and transparent, incorporating new conditions and regulatory frameworks quickly.

Can we build AI applications that can be trusted?

There is no trust without explainability. Explainability means that there are other trustworthy agents in the system who understand and can explain the decisions made by the AI agent. Eventually, this will be regulated by authorities, but for the time being there is no other option than making decisions made by AI more transparent. Unfortunately, it is in the nature of some of the most popular Machine Learning algorithms that the basis of their calculated rules cannot be explained; the rules are just “a matter of fact.”

The only way out of this dilemma is a fundamental re-engineering of the underlying architecture, which includes Knowledge Graphs as a prerequisite to calculate not only rules but also corresponding explanations.

What is Semantic AI and what makes it different?

Semantic AI fuses symbolic and statistical AI. It draws on methods from Machine Learning, Knowledge Modeling, Natural Language Processing, Text Mining, and the Semantic Web, and combines the advantages of both AI strategies, mainly semantic reasoning and neural networks. In short, Semantic AI is not an alternative to, but an extension of, what is currently mainly used to build AI-based systems. This brings not only strategic options, but also an immediate advantage: faster learning from less training data, for example to overcome the so-called cold start problem when developing chatbots.

Semantic AI also introduces a fundamentally different methodology and thus additional stakeholders with complementary skills. While traditional Machine Learning is mainly done by Data Scientists, Knowledge Scientists are the ones who are involved in Semantic AI or Explainable AI. What is the difference?

The core of the problem is that data scientists spend more than 50 percent of their time collecting and processing uncontrolled digital data before it can be explored for useful nuggets. Many of these efforts focus on building flat files with unrelated data. Once the features are generated, they begin to lose their relationship to the real world.

An alternative approach is to develop tools for analysts to directly access an enterprise Knowledge Graph to extract a subset of data that can be quickly transformed into structures for analysis. The results of the analyses themselves can then be reused to enrich the Knowledge Graph.
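As a rough illustration of this extract-analyze-enrich loop, here is a minimal Python sketch. It assumes a toy in-memory graph of (subject, predicate, object) triples. The entity names, the predicates, and the helper functions `extract_rows` and `enrich` are all hypothetical and are not part of any particular product or standard API.

```python
# Hypothetical in-memory knowledge graph as (subject, predicate, object) triples.
# All identifiers and values are illustrative, not taken from a real dataset.
triples = {
    ("acme", "type", "Company"),
    ("acme", "sector", "Finance"),
    ("acme", "employees", 1200),
    ("globex", "type", "Company"),
    ("globex", "sector", "Healthcare"),
    ("globex", "employees", 300),
    ("austin", "type", "City"),
}


def extract_rows(graph, entity_type, properties):
    """Select entities of one type and flatten chosen properties into rows."""
    entities = sorted(s for s, p, o in graph if p == "type" and o == entity_type)
    rows = []
    for entity in entities:
        row = {"id": entity}
        for prop in properties:
            values = [o for s, p, o in graph if s == entity and p == prop]
            row[prop] = values[0] if values else None
        rows.append(row)
    return rows


def enrich(graph, rows, predicate, analysis):
    """Return a new graph with one analysis result per row added as a triple."""
    return graph | {(row["id"], predicate, analysis(row)) for row in rows}


# 1. Extract a subset of the graph as flat rows for analysis.
rows = extract_rows(triples, "Company", ["sector", "employees"])

# 2. Run an analysis (here a trivial, made-up size classification).
def size_class(row):
    return "large" if row["employees"] >= 1000 else "small"

# 3. Feed the results back into the graph.
enriched = enrich(triples, rows, "sizeClass", size_class)
```

Because the rows keep the entity identifier, every derived value can be traced back to a node in the graph, which is the property that flat feature files tend to lose.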

The Semantic AI approach thus creates a continuous cycle of which both Machine Learning practitioners and Knowledge Scientists are an integral part. Knowledge Graphs serve as an interface in between, providing high-quality linked and normalized data.

Does this new approach to AI lead to better results?

Apart from its potential to generate trustworthy and broadly accepted Explainable AI based on Knowledge Graphs, the use of Knowledge Graphs together with semantically enriched and linked data to train Machine Learning algorithms has many other advantages.

This approach leads to results with sufficient accuracy even with sparse training data, which is especially helpful in the cold start phase, when the algorithm cannot yet draw inferences from the data because it has not yet gathered enough information. It also leads to better reusability of training data sets, which helps to save costs during data preparation. In addition, it complements existing training data with background knowledge that can quickly lead to richer training data through automated reasoning and can also help avoid the extraction of fundamentally wrong rules in a particular domain.
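To make the idea of enriching sparse training data with background knowledge more concrete, the following Python sketch infers broader concepts for each training example by following a concept hierarchy transitively. The hierarchy, the tags, the labels, and the function names are assumptions made up for illustration; a real system would typically rely on a reasoner over a full Knowledge Graph rather than a plain dictionary.

```python
# Hypothetical background knowledge: each concept maps to its broader concept.
broader = {
    "mortgage": "loan",
    "loan": "credit product",
    "credit card": "credit product",
}


def ancestors(concept, hierarchy):
    """Follow 'broader' links transitively and collect every ancestor."""
    found = []
    while concept in hierarchy:
        concept = hierarchy[concept]
        if concept in found:  # guard against cycles in the hierarchy
            break
        found.append(concept)
    return found


def enrich_example(tags, hierarchy):
    """Return the original tags plus all inferred broader concepts."""
    inferred = set(tags)
    for tag in tags:
        inferred.update(ancestors(tag, hierarchy))
    return inferred


# Sparse training data: two examples with no tags in common.
training = [
    ({"mortgage"}, "finance"),
    ({"credit card"}, "finance"),
]

enriched_training = [
    (enrich_example(tags, broader), label) for tags, label in training
]
```

After enrichment, both examples carry the inferred tag `credit product`, so a learner sees a shared feature even though the raw examples had none in common.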

I have a master’s in Business Informatics and am passionate about Knowledge Graphs, Semantic Technologies, and Machine Learning. I founded the Semantic Web Company 15 years ago, and since then we have helped over 100 companies use Semantic Web-based methods and techniques. Our Semantic AI platform, PoolParty Semantic Suite, is one of the leading technologies in graph and metadata management, text mining, and advanced data analytics. Our customers are Fortune 500 companies looking for better ways to establish collaborative workflows and new data strategies that address problems related to data silos, data quality, information retrieval, and data integration. I am confident that knowledge graphs have the potential to change not just organizations, but the world, because they are capable of nothing less than connecting data, knowledge, and people so we can provide answers to small, large, and even global problems.